Liangze Jiang

I am a PhD student at EPFL, advised by Damien Teney and Caglar Gulcehre. I am also a Research Assistant at Idiap Research Institute. Previously, I received my MSc from EPFL and was a Student Researcher at Google Research. I obtained my Bachelor's degree from the University of Electronic Science and Technology of China. My research interests lie in empirically understanding and improving generalization and reasoning, particularly how models generalize and extrapolate beyond their training distributions. This includes, for example:

I'm always open to discussions and potential collaborations. Feel free to drop me an email if you'd like to connect!

Email / Google Scholar / Twitter / Github

Publications (* denotes equal contribution)

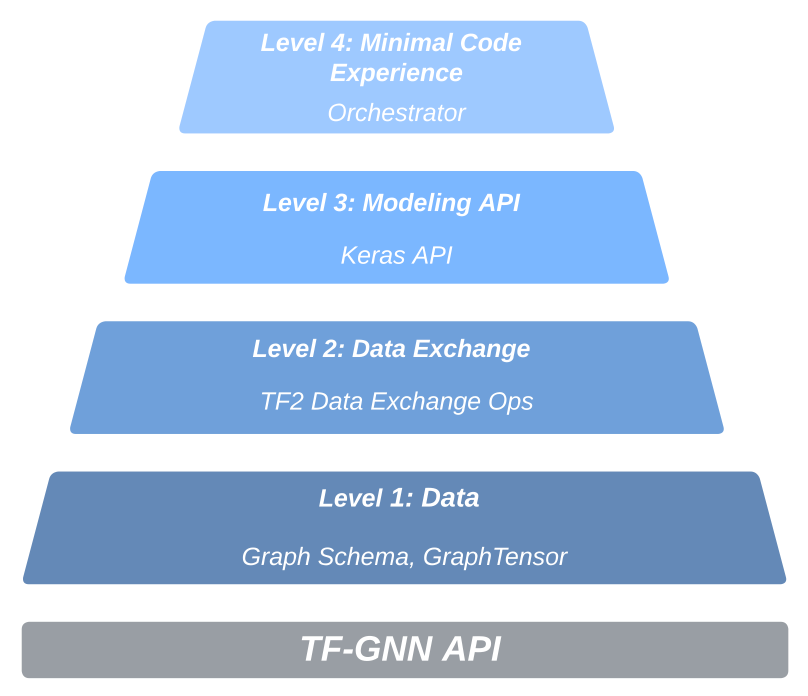

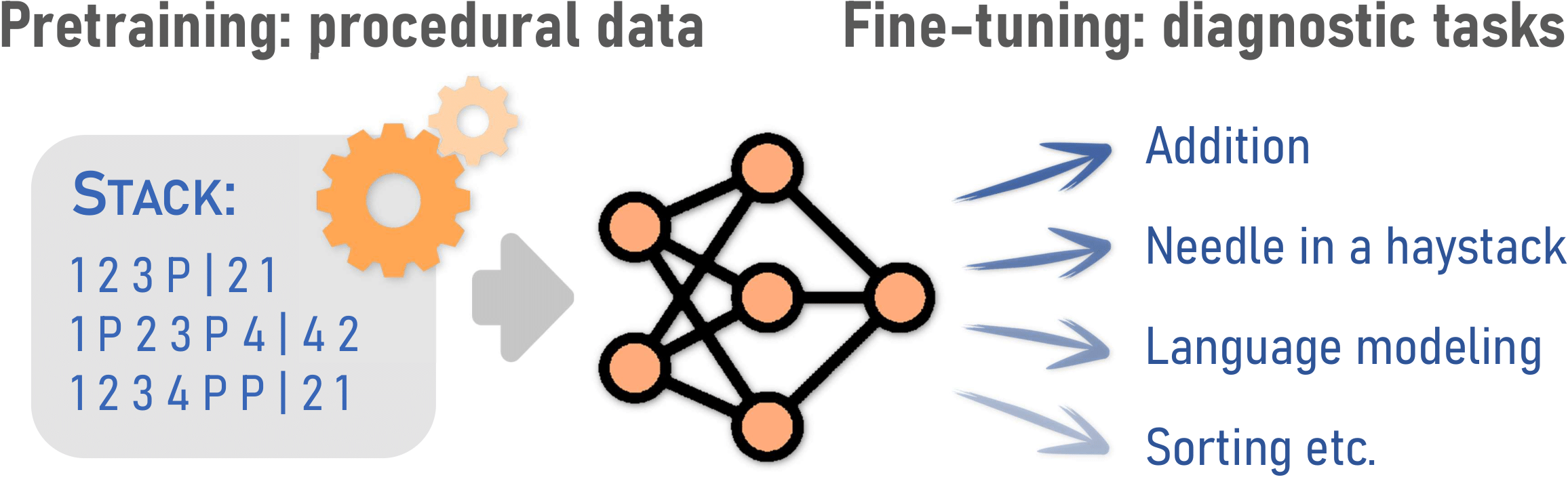

Transformers Pretrained on Procedural Data Contain Modular Structures for Algorithmic Reasoning

Zachary Shinnick, Liangze Jiang, Hemanth Saratchandran, Anton van den Hengel, Damien Teney

MOSS@ICML, 2025

arXiv / Github

What specific capabilities can simple synthetic, semantic-free data instill into a model, where do these capabilities reside in the architecture, and how do they manifest within its weights?

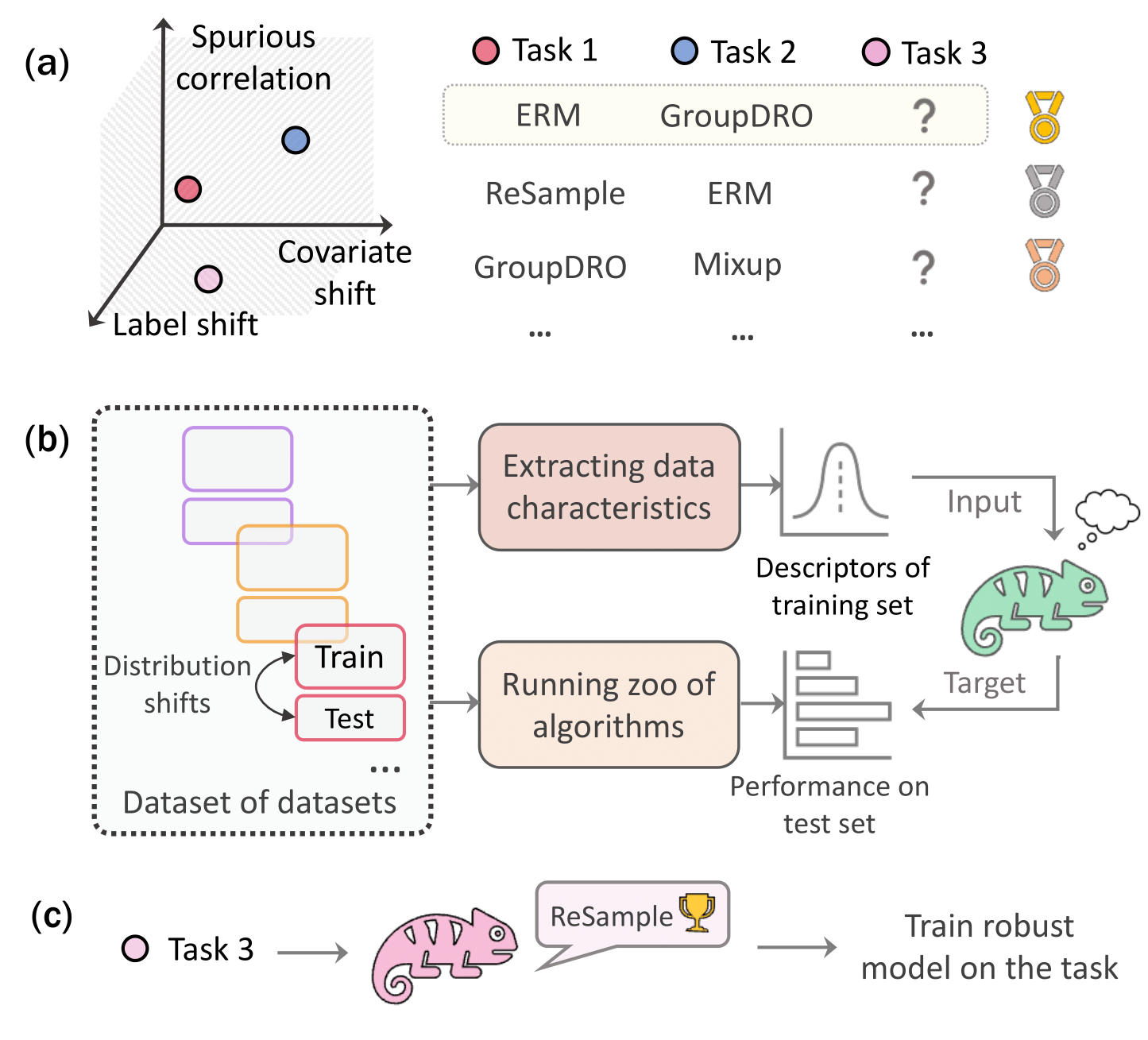

OOD-Chameleon: Is Algorithm Selection for OOD Generalization Learnable?

Liangze Jiang, Damien Teney

ICML, 2025

OpenReview / arXiv / Github

The bitter lesson in OOD generalization is that no single algorithm can address all distribution shifts. Can we learn to predict a priori the right algorithm for a dataset, given some of its measurable properties, even when multiple distribution shifts appear simultaneously?

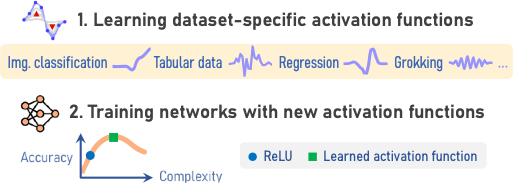

Do We Always Need the Simplicity Bias? Looking for Optimal Inductive Biases in the Wild

Damien Teney, Liangze Jiang, Florin Gogianu, Ehsan Abbasnejad

CVPR, 2025 (Oral)

arXiv

We modulate the inductive bias of neural architectures by meta-learning novel activation functions that improve generalization. With this approach, we identify diverse tasks where the simplicity bias of ReLU architectures is suboptimal.

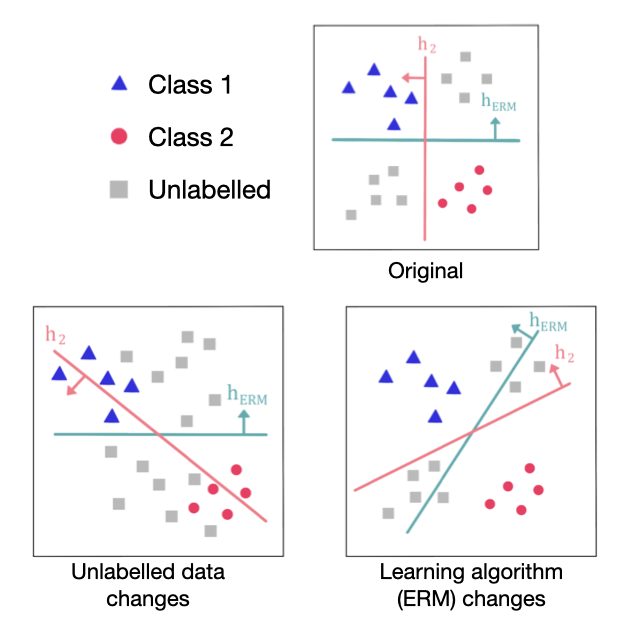

Unraveling the Key Components of OOD Generalization via Diversification

Harold Benoit*, Liangze Jiang*, Andrei Atanov*, Oğuzhan Fatih Kar, Mattia Rigotti, Amir Zamir

ICLR, 2024

OpenReview / arXiv

We reveal the crucial co-dependence of training data, algorithm, and architectural inductive bias in diversification methods, which were shown to achieve state-of-the-art results in OOD generalization (e.g., under spurious correlations).

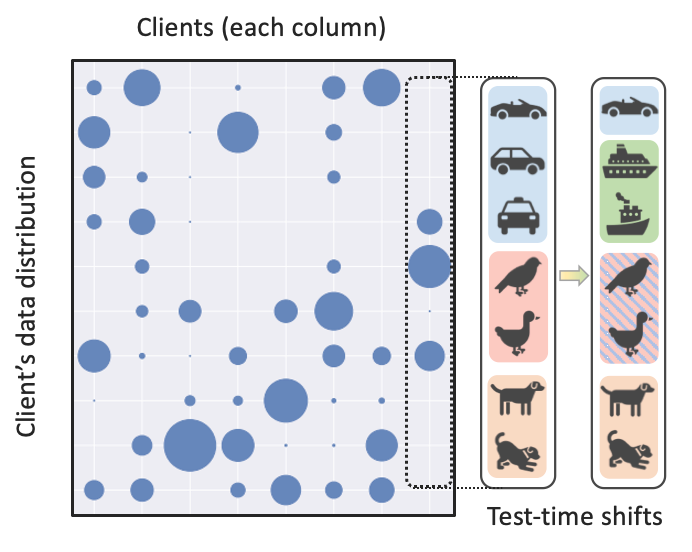

Test-Time Robust Personalization for Federated Learning

Liangze Jiang*, Tao Lin*

ICLR, 2023

OpenReview / arXiv / Github

We show that existing personalized FL algorithms are not robust to local distribution shifts. We then propose FedTHE, which adaptively ensembles the personalized and global models at test time. It is shown to be robust to diverse types of distribution shift.

Honors & Awards

Teaching & Academic Service

Misc

My Chinese name is 姜良泽, pronounced as "Jiang Liangze" in Pinyin.

Last updated in July 2025. Template is here.